Innovative Approaches to RAG: Embracing Full-Document Retrieval

Written on

Chapter 1: Introduction to RAG

Retrieval Augmented Generation (RAG) combines the capabilities of large language models (LLMs) with external knowledge bases. Traditionally, this method involved segmenting documents into smaller parts to identify the most relevant information for addressing user queries. However, a new paradigm is gaining traction: using entire documents directly in LLM prompts, thus simplifying the process.

LlamaIndex, a premier framework for constructing RAG pipelines, is spearheading this transformation. Recent enhancements in LlamaIndex, along with the development of long-context models and decreasing computational expenses, have made full-document retrieval not only feasible but also a preferred method. This technique proves particularly beneficial for tasks like summarization, where maintaining the original context of a document can yield superior outcomes.

Chapter 2: Definitions and Concepts

RAG (Retrieval Augmented Generation): A strategy for enhancing LLM outputs with information from external sources or document collections.

Chunking: The process of dividing a document into smaller sections for easier access and processing.

Full-Document Retrieval: An approach that retrieves an entire document as a single unit for LLM input.

LlamaIndex: A versatile and robust framework for developing RAG pipelines.

Long-Context Models: Variants of LLMs capable of handling significantly longer input sequences.

Benefits of Full-Document Retrieval

- Context Preservation: Utilizing full documents ensures that the LLM retains the entire context of the source material, resulting in more accurate and nuanced responses, especially for summarization tasks.

- Streamlined Pipelines: Skipping the chunking process simplifies the pipeline, potentially enhancing the speed and efficiency of RAG implementations.

- Cost Reduction: As long-context models become more cost-effective and efficient, full-document retrieval emerges as a budget-friendly option.

Chapter 3: Implementing RAG with Full-Document Retrieval

Let's explore how to implement RAG using full-document retrieval alongside auto-retrieval for files. The following steps outline the process:

Option I: RAG with Full-Document Retrieval 1. Install Required Libraries and Download Files !pip install llama-index !pip install llama-index-core !pip install llama-index-embeddings-openai !pip install llama-index-question-gen-openai !pip install llama-index-postprocessor-flag-embedding-reranker !pip install llama-parse

# Download documents # Additional download commands...

2. Initialize API and Index import os import nest_asyncio nest_asyncio.apply()

from llama_index.indices.managed.llama_cloud import LlamaCloudIndex

os.environ["LLAMA_CLOUD_API_KEY"] = "your-api-key" os.environ["OPENAI_API_KEY"] = "your-openai-key"

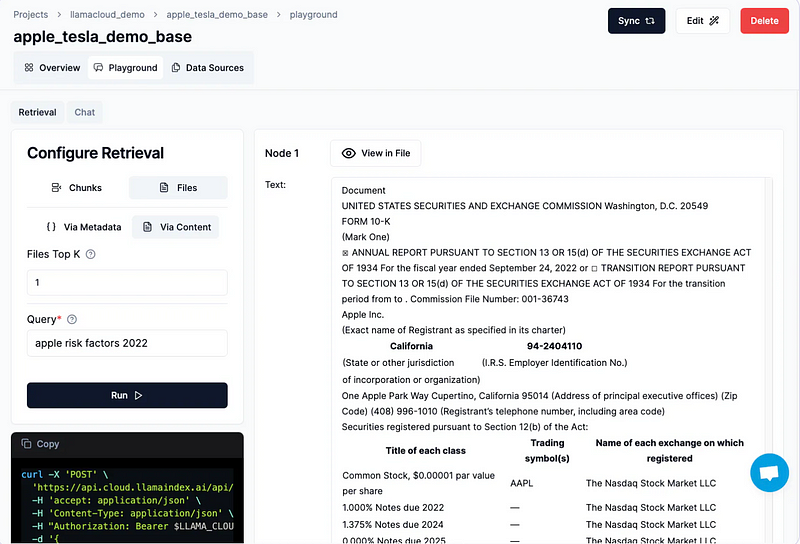

index = LlamaCloudIndex(name="apple_tesla_demo_base", project_name="llamacloud_demo", api_key=os.environ["LLAMA_CLOUD_API_KEY"])

3. Define File Retriever from llama_index.core.query_engine import RetrieverQueryEngine from llama_index.llms.openai import OpenAI

doc_retriever = index.as_retriever(retrieval_mode="files_via_content", files_top_k=1) llm = OpenAI(model="gpt-4o-mini") query_engine_doc = RetrieverQueryEngine.from_args(doc_retriever, llm=llm, response_mode="tree_summarize")

This video discusses the process of building a production RAG system over complex documents, providing insights into the methodology and applications.

4. Define Chunk Retriever chunk_retriever = index.as_retriever(retrieval_mode="chunks", rerank_top_n=5) llm = OpenAI(model="gpt-4o-mini") query_engine_chunk = RetrieverQueryEngine.from_args(chunk_retriever, llm=llm, response_mode="tree_summarize")

5. Build an Agent from llama_index.core.tools import FunctionTool, ToolMetadata, QueryEngineTool from llama_index.core.agent import FunctionCallingAgentWorker

doc_metadata_extra_str = """Each document represents a complete 10K report for a given year (e.g. Apple in 2019). """

tool_doc_description = f"""Synthesizes answers by utilizing an entire relevant document. Ideal for higher-level summarization queries. """

tool_chunk_description = f"""Synthesizes answers by using a relevant chunk. Best for specific queries requiring detailed responses. """

tool_doc = QueryEngineTool(query_engine=query_engine_doc, metadata=ToolMetadata(name="doc_query_engine", description=tool_doc_description)) tool_chunk = QueryEngineTool(query_engine=query_engine_chunk, metadata=ToolMetadata(name="chunk_query_engine", description=tool_chunk_description))

llm_agent = OpenAI(model="gpt-4o") agent = FunctionCallingAgentWorker.from_tools([tool_doc, tool_chunk], llm=llm_agent, verbose=True).as_agent()

response = agent.chat("Tell me the revenue for Apple and Tesla in 2021?")

In this video, three methods for semantic chunking are explored to enhance RAG performance, focusing on their effectiveness and applications.

Conclusion

The advent of full-document retrieval represents a significant advancement in RAG. With frameworks like LlamaIndex enabling this shift, along with the growing accessibility of long-context models and lowered computational costs, this method is poised to revolutionize RAG applications. By maintaining document integrity, simplifying processes, and reducing expenses, full-document retrieval is an essential tool for maximizing the capabilities of LLMs.

Resource: Dynamic Retrieval with LlamaCloud

Stay connected and support my work through various platforms: Github, Patreon, Kaggle, Hugging-Face, YouTube, GumRoad, Calendly

If you appreciate my content, consider buying me a coffee!

For inquiries or project ideas, feel free to reach out. Your feedback and support encourage me to continue creating quality content!