# Understanding Explainable AI: The Need for Clarity in AI Decisions


Chapter 1: Introduction to Explainable AI

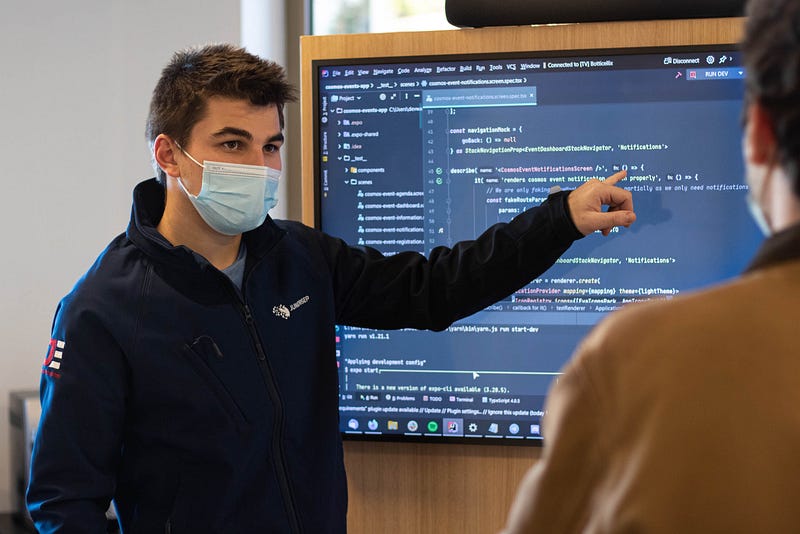

Can we truly make sense of AI? This question leads us to the realm of Explainable Artificial Intelligence (xAI), which aims to make the way AI systems reach their decisions understandable to humans.

In my previous role at IBM, I oversaw an AI initiative for a banking institution. During our final presentation to the steering committee, I proudly showcased our model that boasted a 98% accuracy rate in detecting fraudulent transactions. However, my confidence waned when an executive inquired, "This looks impressive, but how does your AI determine fraud?" My attempt to explain our use of an artificial neural network was met with confusion and concern. This experience highlighted the pressing need for AI to be interpretable and reliable.

Chapter 2: What is Explainable AI?

Explainable AI encompasses methods that make the workings of AI systems transparent, so that users can understand and trust their decisions. This stands in contrast to the “black box” nature of many machine learning systems, where even the developers who built them struggle to interpret how decisions are made.

The objective of xAI is to build trust in AI applications by revealing the processes behind their outputs, countering the trend toward opaque models.
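One of the simplest ways to provide that insight is to use a model whose output decomposes into per-feature parts. The sketch below assumes a hypothetical linear (logistic) fraud-scoring model: the feature names, weights, and sample transaction are invented for illustration and are not the model from the IBM project, which was a neural network and cannot be read this way. For a linear model, each feature's contribution to the log-odds is its weight times its value, so a single decision can be explained term by term.

```python
import math

# Hypothetical weights for an illustrative linear fraud-scoring model.
# These numbers are invented for the example, not learned from real data.
WEIGHTS = {
    "amount_vs_usual": 1.8,     # transaction amount relative to the customer's norm
    "foreign_merchant": 1.2,    # 1 if the merchant is abroad, else 0
    "night_time": 0.6,          # 1 if the transaction happened at night, else 0
    "account_age_years": -0.3,  # older accounts lower the score
}
BIAS = -3.0


def explain(transaction):
    """Return the fraud probability and each feature's additive contribution to the log-odds."""
    contributions = {name: w * transaction[name] for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))  # logistic (sigmoid) function
    return probability, contributions


tx = {"amount_vs_usual": 2.5, "foreign_merchant": 1, "night_time": 1, "account_age_years": 3}
prob, contribs = explain(tx)
print(f"fraud probability: {prob:.2f}")
# List features from most to least influential, by absolute contribution.
for name, c in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>18}: {c:+.2f}")
```

Running it prints the fraud probability followed by each feature's signed contribution, largest first: the kind of answer an executive asking "how does your AI determine fraud?" is looking for. The price of this readability is that a linear model may capture complex fraud patterns less well than a neural network.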

Chapter 3: The Human Aspect of Decision-Making

Even our own decision-making can be hard to explain. When ordering at a café, could we say why we chose something different from our friends? Intricate biological signals are involved, yet we often cannot articulate our reasoning clearly. This complexity underscores the importance of making AI decisions understandable, especially when they affect people's lives.

Chapter 4: Why Explainability Matters

Explainability becomes especially urgent when AI systems influence significant life decisions. Being able to scrutinize and understand the rationale behind an AI decision is crucial both for holding the system accountable and for improving it.

xAI serves to uncover the underlying factors that contribute to AI decisions, enabling stakeholders to effectively challenge and refine these models. Understanding not just the "what," but the "why" and "how," is essential for fostering trust.
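Uncovering those factors does not always require opening the model. One widely used model-agnostic technique is permutation feature importance: shuffle one input column at a time and measure how much the model's accuracy drops. The article does not prescribe a specific method, so treat this as one illustrative option. The sketch below is plain Python; `black_box_model`, its rule, the three features, and the synthetic data are all hypothetical. The labels are the model's own predictions, so the scores measure how much the model relies on each feature rather than how well it detects real fraud.

```python
import random


def black_box_model(row):
    """Hypothetical stand-in for an opaque model: the code below only calls it.
    (Illustrative rule: flag large transactions at foreign merchants.)"""
    amount, foreign, hour = row
    return 1 if amount > 500 and foreign == 1 else 0


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by the shuffled value.
            X_perm = [row[:j] + (value,) + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Synthetic (amount, foreign, hour) rows; labels come from the model itself.
data_rng = random.Random(42)
X = [(data_rng.uniform(0, 1000), data_rng.randint(0, 1), data_rng.randint(0, 23)) for _ in range(500)]
y = [black_box_model(row) for row in X]

for name, imp in zip(["amount", "foreign", "hour"], permutation_importance(black_box_model, X, y)):
    print(f"{name:>8}: {imp:.3f}")
```

Because the hypothetical model ignores `hour`, shuffling that column never changes a prediction and its importance comes out as exactly zero, while `amount` and `foreign` show positive drops. For real projects, scikit-learn offers `sklearn.inspection.permutation_importance`, which applies the same idea to fitted estimators.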

The first video, titled "Introduction to Explainable AI (XAI)," discusses the foundational concepts of explainable AI, exploring interpretable models and methods for making AI systems more transparent.

Chapter 5: Challenges in Explainable AI

Two primary challenges persist in xAI: defining the concept clearly, and balancing performance with explainability. Transparency requirements also create a dilemma for companies: disclosing detailed information about their algorithms may put their intellectual property at risk.

How to strike the right balance between an AI system's effectiveness and its transparency remains an open debate.

The second video, "Explainable AI explained! | #1 Introduction," provides an overview of the significance of xAI in today's AI landscape, emphasizing its role in fostering trust and understanding.

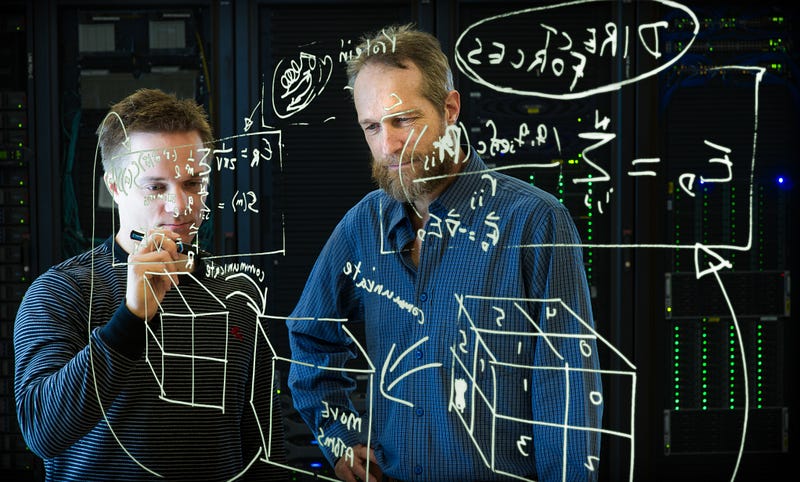

Chapter 6: Peering into the Black Box

The OECD's principles for AI stress the necessity of transparency and responsible disclosure in AI systems. While research strives to demystify AI, the processes behind its decisions often remain elusive.

Recent research suggests that some AI algorithms may operate in ways akin to human reasoning, which could help us understand how they function.

Chapter 7: Future Directions in Explainable AI

Significant efforts are underway to clarify how AI reaches its decisions. Research initiatives, such as those at the University of South Carolina, aim to develop AI that can explain its own conclusions, using puzzles like the Rubik’s Cube to illustrate the path a system takes to a solution.

Understanding AI is becoming increasingly critical as it becomes more deeply integrated into our daily lives, affecting areas such as transportation, healthcare, and more.

Conclusion

As AI continues to evolve and influence various domains, the demand for transparency and understanding grows stronger. Explainable AI represents a vital field that seeks to bridge the gap between complex AI systems and human comprehension.

By fostering trust and providing clarity, we can ensure AI serves humanity effectively and ethically.

Key Points to Remember

- xAI encompasses methodologies that enhance transparency in AI systems.

- Understanding the rationale behind AI decisions is vital for accountability.

- Two main challenges in xAI are defining the concept and balancing performance with explainability.

- Continued research aims to clarify AI's decision-making process.
