# AI's Journey in Minecraft: Steps Toward Open-Ended Intelligence

## Chapter 1: The Challenge of Open-Ended AI

OpenAI has made significant strides in language processing with GPT-3 and in image generation with DALL·E 2. However, the organization now faces an even more formidable challenge: enabling AI to take open-ended actions. In the pursuit of artificial general intelligence (AGI), language and visual capabilities alone are clearly insufficient. GPT-3 and DALL·E 2 are remarkably proficient within their own domains, but they can only produce text or images. Neither can act in an environment to pursue a goal.

One of the early adopters of GPT-3, Sharif Shameem, discovered that it could be prompted to write code, a capability OpenAI later developed into Codex, the model behind GitHub Copilot. He also tried other tasks, such as having GPT-3 buy AirPods from Walmart. Although it made some progress, GPT-3 ultimately failed to complete the purchase, illustrating that while language is a powerful tool, it has its limitations.

The shortcomings of GPT-3 highlight the need for a different kind of intelligence—one that can navigate complex, real-world scenarios. This is where OpenAI is focusing its efforts, starting with teaching an AI to play Minecraft by learning from human behavior.

## Chapter 2: Minecraft as a Learning Platform

Minecraft is an open-world game: players can undertake a myriad of actions without following a fixed path. This flexibility, combined with its accessibility, has helped make it the best-selling video game of all time. The vast amount of gameplay footage on platforms like YouTube is a rich potential dataset for training AI. However, these videos record only what appears on screen, not the keyboard and mouse inputs that produced it, so an AI watching them cannot directly learn which actions lead to which results.

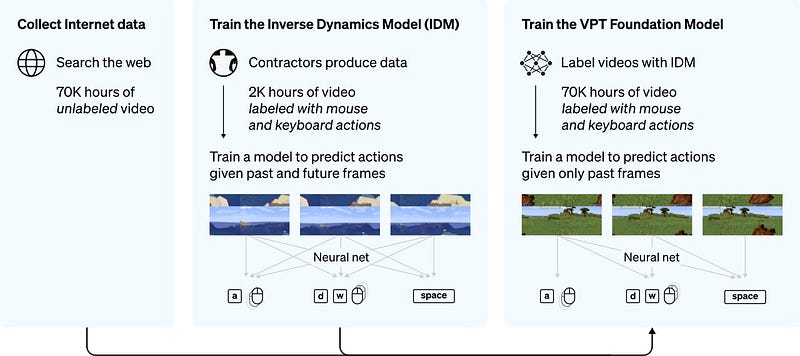

OpenAI recently introduced a method called Video PreTraining (VPT) as a solution. This "semi-supervised imitation learning method" leverages gameplay videos to teach AI how to perform various tasks in Minecraft. The learning process is divided into two main stages, which have proven to yield better outcomes than a single, integrated approach.

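First, researchers train a neural network called the inverse dynamics model (IDM) on a comparatively small dataset: 2,000 hours of Minecraft footage labeled with the players' keyboard and mouse actions. Given a stretch of video, the IDM learns to infer which action was taken at each frame, using both the frames before it and the frames after it. Because it can see the consequence of each action, this is a much easier problem than predicting the action in advance.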
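To make the "past and future context" idea concrete, here is a deliberately tiny sketch in Python; it is not OpenAI's implementation. It assumes a hypothetical one-dimensional world where each frame is just the agent's x position and the only actions are `left`, `right`, and `stay`. The real IDM is a large neural network over raw pixels and full keyboard/mouse actions; the lookup-table "model" and all names here are illustrative assumptions chosen only to show why seeing the next frame makes action labeling easy.

```python
from collections import Counter, defaultdict
import random

# Hypothetical toy world: a frame is the agent's x position.
ACTIONS = ("left", "right", "stay")
STEP = {"left": -1, "right": 1, "stay": 0}


def simulate(actions, start=0):
    """Roll out the toy world and return the sequence of frames."""
    frames = [start]
    for a in actions:
        frames.append(frames[-1] + STEP[a])
    return frames


def train_idm(labeled_episodes):
    """Fit a toy inverse dynamics model.

    It conditions on the frame *after* the action (non-causal), so it only
    has to explain a change it can already see. The "model" is a table of
    action counts keyed by the observed change between consecutive frames.
    """
    counts = defaultdict(Counter)
    for frames, actions in labeled_episodes:
        for t, a in enumerate(actions):
            delta = frames[t + 1] - frames[t]  # uses the past AND the future frame
            counts[delta][a] += 1
    return {delta: c.most_common(1)[0][0] for delta, c in counts.items()}


def idm_label(idm, frames):
    """Infer the action taken between every pair of consecutive frames."""
    return [idm.get(frames[t + 1] - frames[t], "stay") for t in range(len(frames) - 1)]


# Small "contractor" dataset that comes with ground-truth action labels.
rng = random.Random(0)
labeled = []
for _ in range(20):
    acts = [rng.choice(ACTIONS) for _ in range(10)]
    labeled.append((simulate(acts), acts))

idm = train_idm(labeled)
print(idm_label(idm, [0, 1, 1, 0]))  # → ['right', 'stay', 'left']
```

The lookup table only works because the toy world is trivially deterministic; the point is what the model conditions on, not the model class.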

In the second stage, the IDM labels 70,000 hours of unlabeled Minecraft videos gathered from the internet, giving the VPT foundation model a far larger training set. The foundation model then learns to predict the next action from only the frames it has already seen, a technique known as "behavioral cloning."
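The two stages can be strung together in a toy sketch in Python; this is an illustration, not OpenAI's code. It assumes a hypothetical one-dimensional world where each frame is the agent's x position, hypothetical "human" players who walk toward a fixed spot (`TARGET`), and an already-trained IDM stood in for by a hypothetical function that reads the action off the change between two frames. The IDM pseudo-labels a pile of unlabeled videos, and a behavioral-cloning policy is then fit on those labels. Note the asymmetry: the IDM looks at the next frame, while the cloned policy sees only the current one, since at play time the future has not happened yet.

```python
from collections import Counter, defaultdict
import random

STEP = {"left": -1, "right": 1, "stay": 0}
TARGET = 5  # hypothetical spot the toy "human" players walk toward


def human_action(x):
    """Stand-in for human players: walk toward TARGET, then wait there."""
    return "right" if x < TARGET else ("left" if x > TARGET else "stay")


def record_video(start, length=12):
    """Return frames only (x positions): like web videos, no action labels."""
    frames = [start]
    for _ in range(length):
        frames.append(frames[-1] + STEP[human_action(frames[-1])])
    return frames


def idm(prev_frame, next_frame):
    """Hypothetical pre-trained IDM (non-causal: it sees the next frame)."""
    return {1: "right", -1: "left", 0: "stay"}[next_frame - prev_frame]


# Stage 2a: pseudo-label a large pile of unlabeled videos with the IDM.
rng = random.Random(1)
videos = [record_video(rng.randint(-10, 20)) for _ in range(500)]
pseudo_labeled = [
    (frames[t], idm(frames[t], frames[t + 1]))
    for frames in videos
    for t in range(len(frames) - 1)
]

# Stage 2b: behavioral cloning. The policy is causal: it maps the current
# frame to the most common pseudo-labeled action for that frame.
counts = defaultdict(Counter)
for obs, action in pseudo_labeled:
    counts[obs][action] += 1
policy = {obs: c.most_common(1)[0][0] for obs, c in counts.items()}


def act(x):
    return policy.get(x, "stay")  # fall back to a no-op for unseen frames


# Run the cloned policy from a start position that appears in the data.
x = 0
for _ in range(10):
    x += STEP[act(x)]
print(x)  # → 5
```

The cloned policy never saw a ground-truth action, only IDM-generated labels, yet it reproduces the players' behavior. That is the core of the VPT trick: a model trained on a small labeled set unlocks a much larger unlabeled one.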

## Chapter 3: Breaking New Ground with VPT

With this approach, VPT learned basic Minecraft skills such as swimming, hunting animals, and eating, purely from video and without any task-specific reward. Such skills are hard to reach with traditional reinforcement learning (RL). In open-ended environments like Minecraft, rewards are sparse and the space of possible action sequences is enormous, so an agent exploring at random rarely stumbles on useful behavior.

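Furthermore, VPT can be fine-tuned on videos of specific tasks, which improved its ability to carry out complex behavior such as building a simple house. OpenAI also showed that VPT is a strong starting point for RL: because its behavior already resembles a human's, RL fine-tuning from VPT explores far more effectively than RL starting from random actions. With this combination, the model learned to craft a diamond pickaxe, a task that typically takes skilled human players over 20 minutes.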
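Why a humanlike starting point helps RL comes down to exploration under sparse rewards. The Python sketch below is a toy, not the VPT setup: it estimates how often an agent reaches a distant reward in a hypothetical corridor when it acts uniformly at random, versus when it samples from a prior that already favors goal-directed moves. The corridor length, horizon, trial count, and prior probabilities are all made-up assumptions; the "pretrained prior" merely stands in for a VPT-like model.

```python
import random

STEP = {"left": -1, "right": 1, "stay": 0}
GOAL, HORIZON, TRIALS = 15, 30, 2000  # hypothetical task: reward only at x == GOAL


def success_rate(policy_probs, seed):
    """Fraction of episodes in which sampling from policy_probs ever reaches GOAL."""
    rng = random.Random(seed)
    actions, weights = zip(*policy_probs.items())
    hits = 0
    for _ in range(TRIALS):
        x = 0
        for _ in range(HORIZON):
            x += STEP[rng.choices(actions, weights=weights)[0]]
            if x == GOAL:  # sparse reward: the only learning signal RL would get
                hits += 1
                break
    return hits / TRIALS


random_policy = {"left": 1, "right": 1, "stay": 1}           # RL from scratch
pretrained_prior = {"left": 0.1, "right": 0.7, "stay": 0.2}  # stand-in for a VPT-like prior

random_rate = success_rate(random_policy, seed=0)
prior_rate = success_rate(pretrained_prior, seed=0)
print(f"random: {random_rate:.3f}  prior: {prior_rate:.3f}")
```

An RL algorithm can only reinforce behavior that earns reward at least occasionally. Under these assumptions the random policy almost never reaches the goal, while the prior reaches it in most episodes, which gives RL something to build on.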

In a nod to the growing importance of open-source initiatives in AI, OpenAI has released the labeled data, code, and model weights to facilitate further research.

## Chapter 4: Limitations and Future Directions

Despite its advancements, VPT is still a far cry from achieving human-like intelligence. There are significant differences between VPT's capabilities and human cognition that highlight the distance we must travel to reach AGI.

### Imitation vs. Original Action

Humans learn not just by observing but by acting, refining their skills through interaction with the environment and adapting as new information arrives. VPT, by contrast, learns by behavioral cloning: it replicates the actions it has seen, without the intrinsic motivation, exploration, and experimentation that drive human learning.

### Virtual vs. Physical World

The limitations of VPT in a virtual setting mirror the challenges faced by self-driving cars in the real world. Both are designed to navigate their respective environments, but the complexity of human interaction and decision-making far exceeds what current AI can achieve. VPT may learn commands and execute them sequentially, but it cannot comprehend the intricate, multifaceted nature of human actions in the physical world.

### Prediction vs. Understanding

While VPT can perform tasks like building structures, it does not grasp the underlying significance of these actions. Human actions are driven by intention and broader goals, which remain obscured from VPT's learning process. For instance, players build shelters in Minecraft not just for the sake of building but to survive and thrive in the game.

As OpenAI continues to chart the path toward AGI, each advancement invites deeper analysis and reflection, reminding us of the vast journey still ahead.

Subscribe to The Algorithmic Bridge for insights into AI's impact on daily life.
